Everything posted on social media platforms such as Instagram, YouTube, Twitter, and Facebook can be, and frequently is, collected, sorted, and fed into algorithms designed to categorize users. This technology allows corporations and governments to make judgements based on extrapolated data and to sort users by lifestyle, interests, socio-economic status, sexual orientation, and other preselected categories.

Not only can algorithms make false assumptions about people based on extrapolated data, but they can also recreate and reinforce biases and stereotypes present in the information supplied to them. Some argue that machine learning and algorithms are objective calculation tools, but they are only as good as the humans who program them and the data they are given. The algorithms themselves can also introduce biases by selecting proxies for things like socio-economic status.

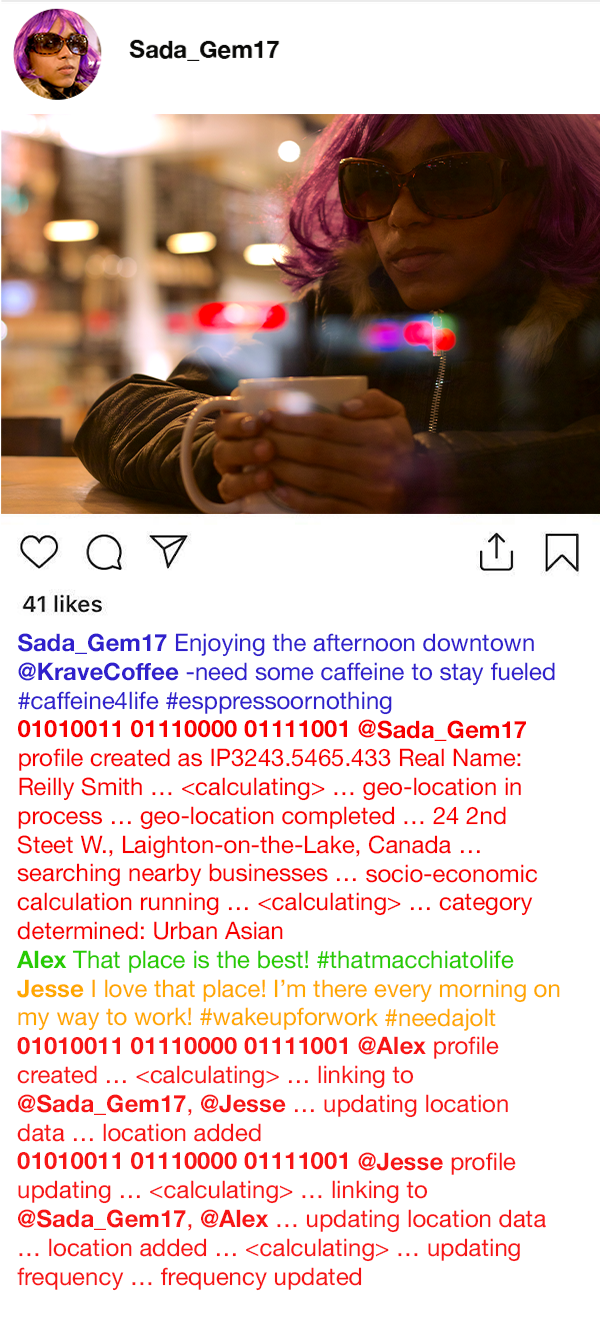

For example, a selfie at a favourite coffee shop (#espressoislife #deathbeforedecaf) will create a spiderweb of categorical associations from the numerous data points in that single post. Location data (from either Wi-Fi or geolocation) will be used to pinpoint where you were when you posted the selfie, and that location feeds assumptions about your socio-economic status based on the neighbourhood, the cost and clientele of the coffee shop, the surrounding shops, and so on. From location data alone, numerous calculations can already be made about your socio-economic status, shopping preferences, mode of transportation, and more.
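To make the coffee shop example concrete, here is a minimal Python sketch of how a handful of hand-written proxy rules could turn one post into a set of labels. Everything in it is hypothetical: the `Post` fields, the price-tier scale, the rule thresholds, and the label names are invented for illustration and do not describe how any real platform works. Real systems are far more complex, but the underlying move is the same: a proxy stands in for something the algorithm cannot observe directly.

```python
from dataclasses import dataclass, field


@dataclass
class Post:
    """One hypothetical social media post (all fields are illustrative)."""
    hashtags: list[str]
    venue_price_tier: int  # 1 (cheap) to 4 (expensive), a made-up scale
    nearby_venues: list[str] = field(default_factory=list)


def infer_categories(post: Post) -> set[str]:
    """Apply crude proxy rules to a single post.

    Each rule is a guess about the poster, not a fact, so the
    resulting labels can easily be wrong.
    """
    labels: set[str] = set()
    # Hashtags are treated as a proxy for interests.
    if any("espresso" in tag.lower() or "decaf" in tag.lower()
           for tag in post.hashtags):
        labels.add("interest:coffee")
    # Venue price is treated as a proxy for income.
    if post.venue_price_tier >= 3:
        labels.add("assumed:higher_income")
    # Surrounding shops are treated as a proxy for mode of transportation.
    if "bike_shop" in post.nearby_venues:
        labels.add("assumed:cyclist")
    return labels


selfie = Post(
    hashtags=["#espressoislife", "#deathbeforedecaf"],
    venue_price_tier=3,
    nearby_venues=["bike_shop", "boutique"],
)
print(sorted(infer_categories(selfie)))
# ['assumed:cyclist', 'assumed:higher_income', 'interest:coffee']
```

Note that the poster may have been treated to the coffee by a friend and may never have ridden a bicycle. The labels are attached anyway.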

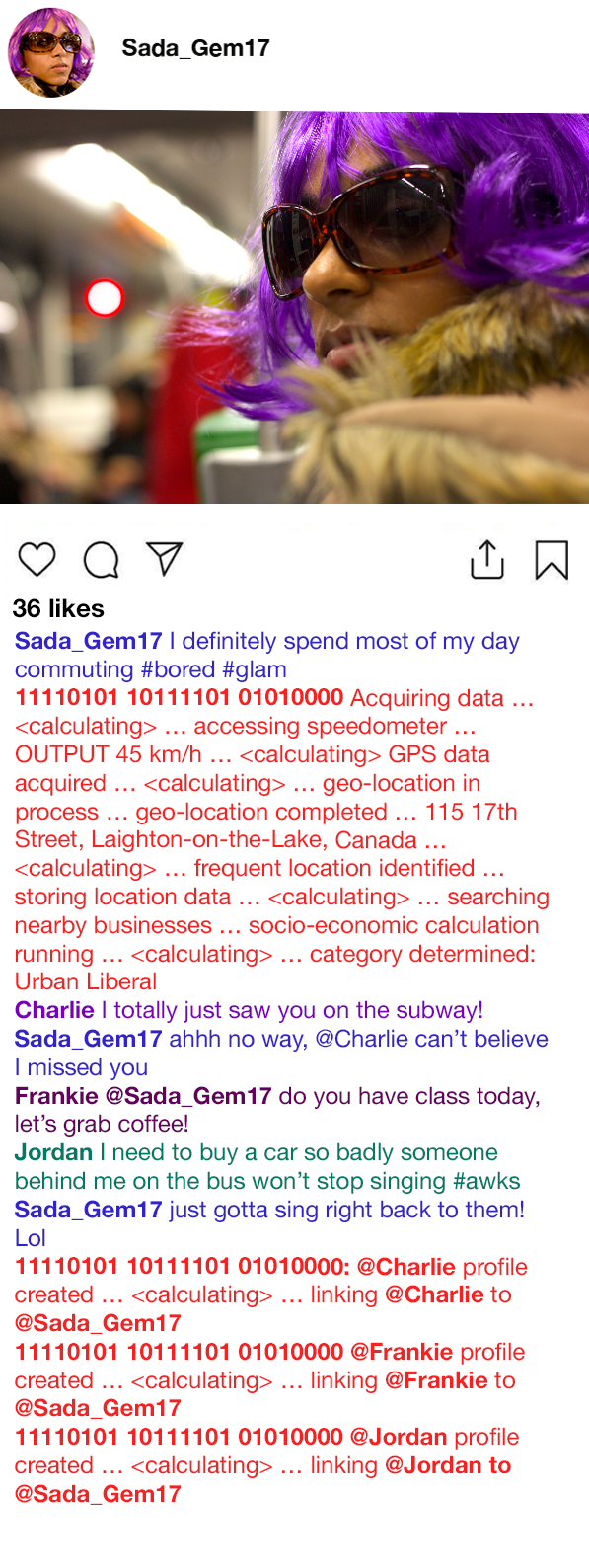

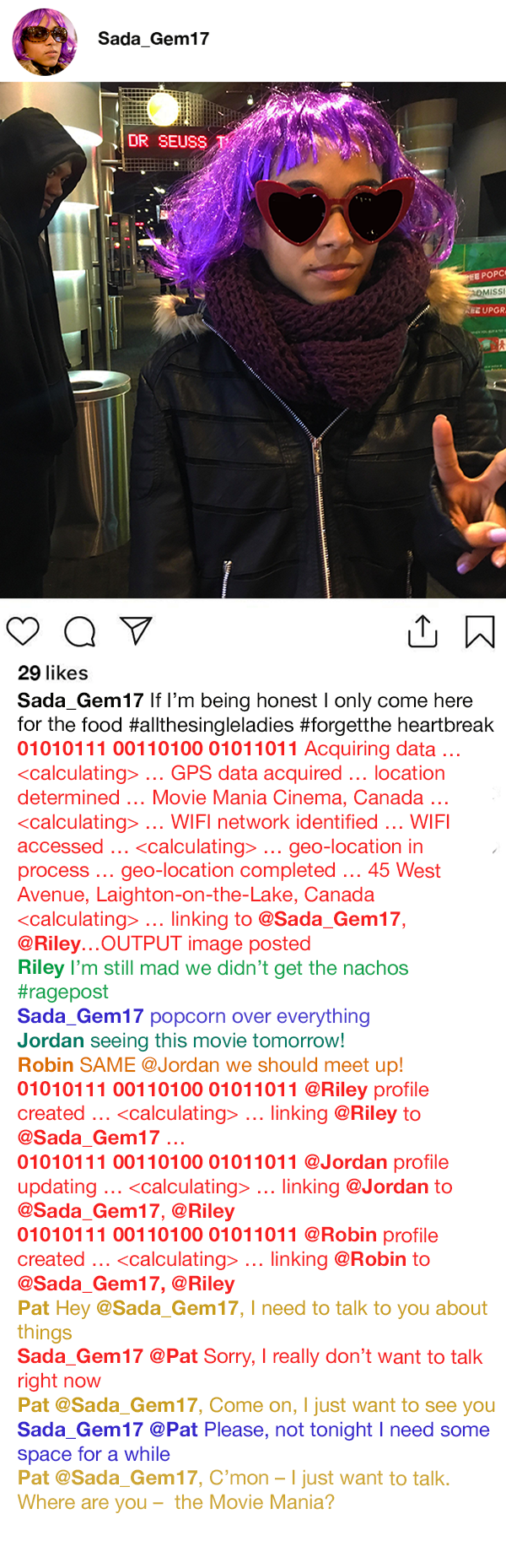

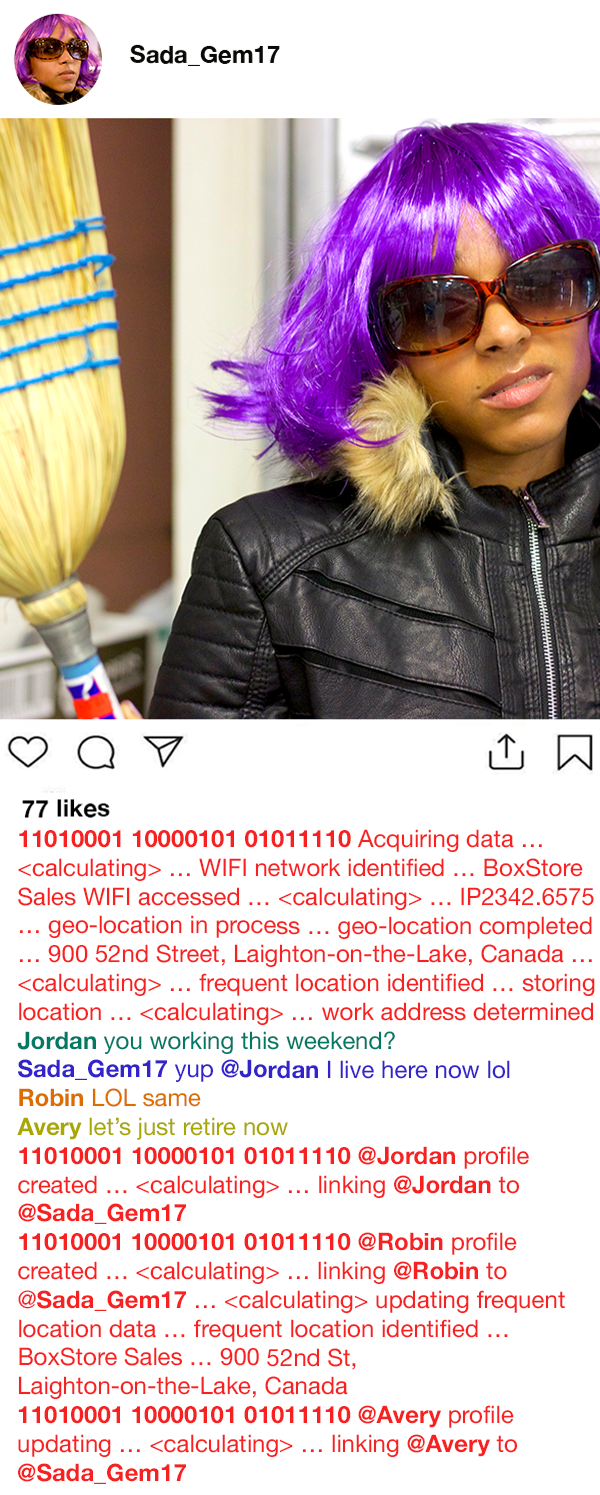

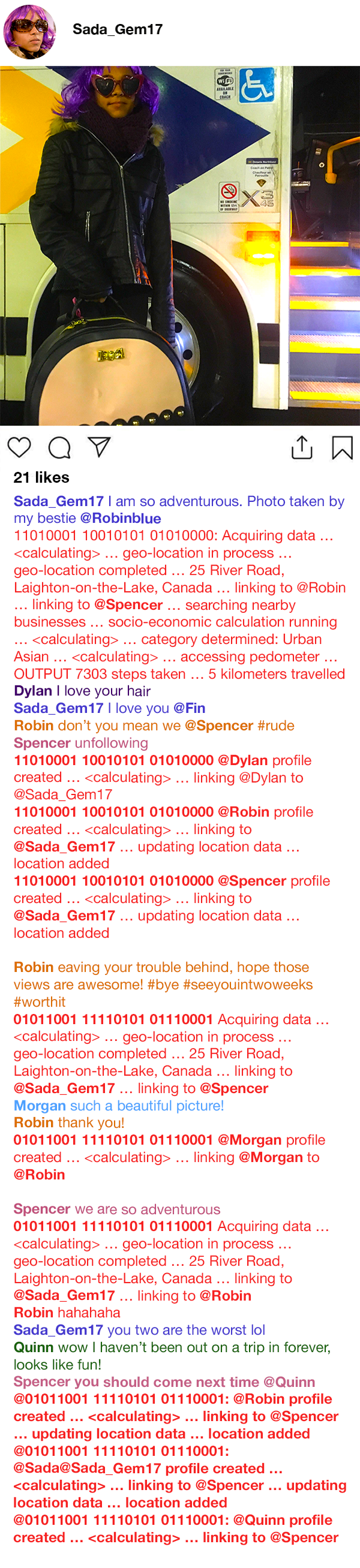

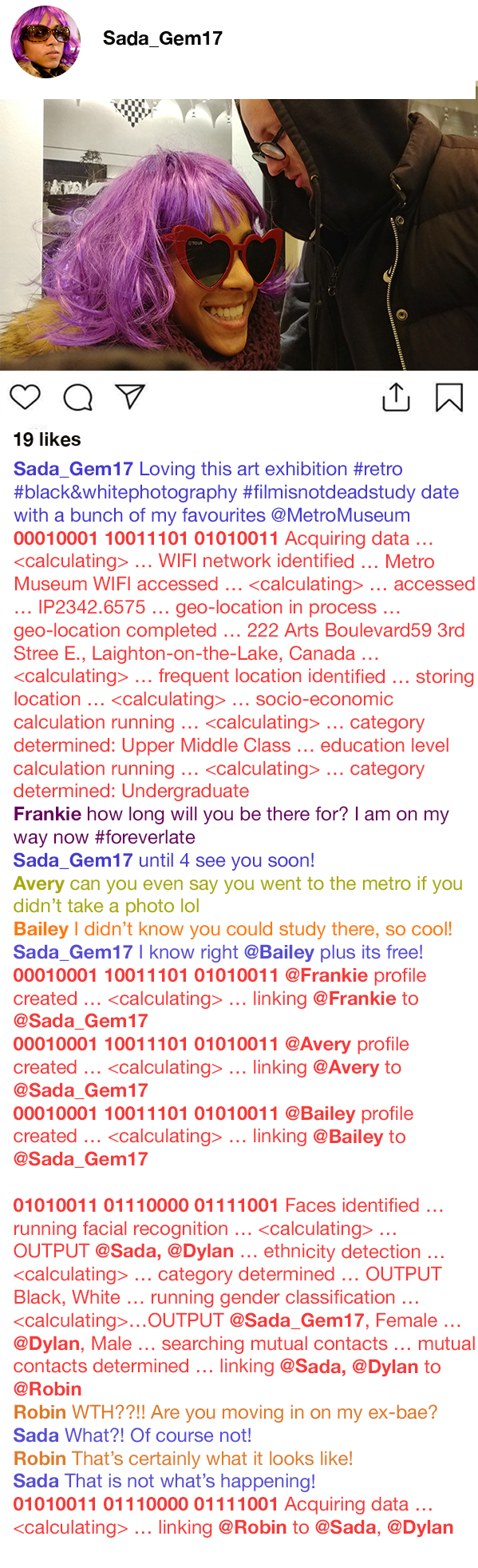

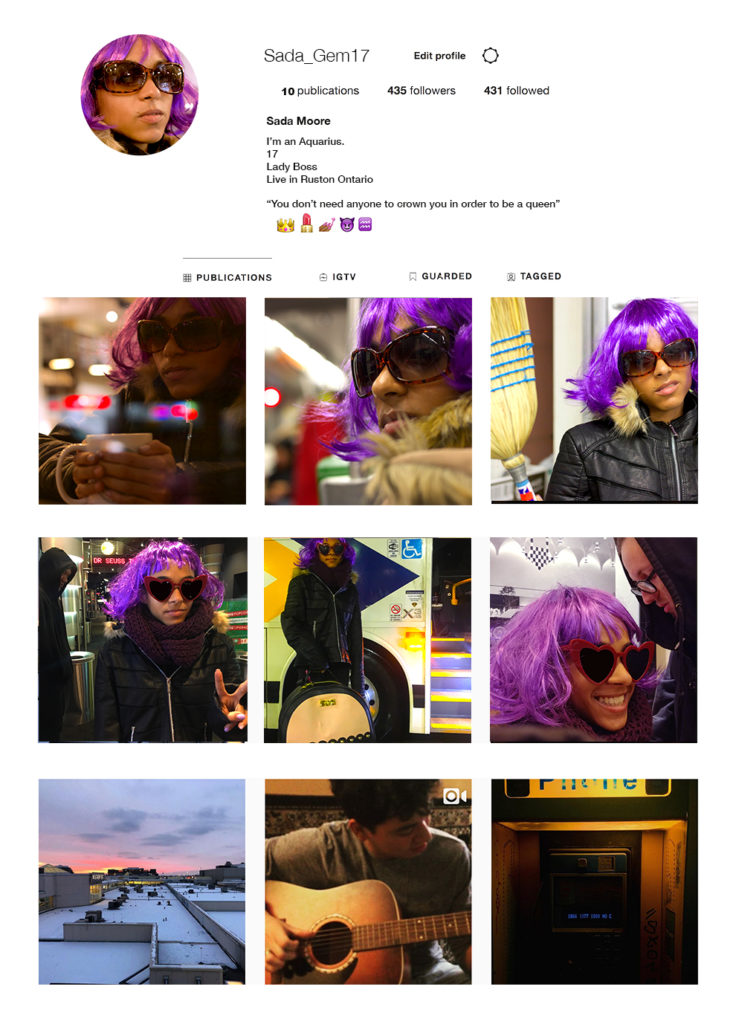

eQuality has created a mock social media page to illustrate how data are collected and how algorithms use those data to make judgements about internet users in ways they do not suspect or realize. Although the page eQ has created is fictitious, it highlights examples of algorithmic assumptions reported in real-world situations. If you look at Sada’s profile, what could you assume from their posts?

By exploring Sada’s posts, you can see how algorithms can collect and use your data without you even knowing where or how they are operating. It’s not only Sada’s information that is being gathered either – algorithms can link people together via interests, relationships, geographical location, and even perceived racial categories.
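The linking step can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the user names other than Sada, the attribute tags, and the `min_shared` threshold are all invented, and simple set overlap is a stand-in for whatever matching a real system might use.

```python
from itertools import combinations

# Hypothetical inferred attributes per user (invented for illustration).
profiles = {
    "sada": {"coffee", "cycling", "downtown"},
    "user_b": {"coffee", "downtown"},
    "user_c": {"gardening"},
}


def link_users(profiles: dict[str, set[str]], min_shared: int = 2):
    """Pair up users who share at least `min_shared` inferred attributes."""
    links = {}
    for a, b in combinations(sorted(profiles), 2):
        shared = profiles[a] & profiles[b]
        if len(shared) >= min_shared:
            links[(a, b)] = shared
    return links


for pair, shared in link_users(profiles).items():
    print(pair, sorted(shared))
# ('sada', 'user_b') ['coffee', 'downtown']
```

In this toy version, anything assumed about one linked user could then be extended to the other, even though the two may never have interacted.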

Interested in our Invisible Machine Lesson Plan? Please follow the link here.